Abstract

This project focuses on improving the diffusion policy framework for robotic manipulation in the Push-T environment. The original Diffusion Policy struggles to capture long-range temporal dependencies. To address this limitation, we introduce transformer-based observation and action encoders designed to model both short- and long-term temporal relationships across the whole trajectory. The resulting model trains stably over 100 epochs, achieves higher task success rates, and slightly reduces the mean number of steps needed to complete the task. The enhanced framework is validated in a custom PyMunk-based simulation environment, where it learns and performs complex tasks with improved stability and adaptability. These advancements are promising for real-world applications in automation and assistive technologies.

Introduction

Robotic manipulation tasks require handling complex action spaces, generalizing across diverse scenarios and long trajectories, and maintaining temporal consistency. Traditional frameworks often fall short on these challenges, limiting their applicability in real-world scenarios. Advancing robotics in fields such as automation and assistive technologies requires overcoming these limitations. This project enhances the Diffusion Policy framework with Transformer-based observation and action encoders, enabling the robot to learn and execute complex tasks with improved stability and adaptability and making the framework more practical for real-world applications.

The key contributions are as follows:

- Scalable Architectural Improvements: Introduced transformer-based observation and action encoders to capture both short- and long-term dependencies, effectively handling complex manipulation scenarios.

- Consistent Training Convergence: Achieved smooth and stable training across 100 epochs, with steadily decreasing training losses, showcasing the robustness of the improved framework.

- Improved Task Success in PushTEnv: Enhanced task performance by reducing the number of steps needed for completion and achieving higher task success rates in the custom simulation environment.

- Optimized Data Pipelines: Developed robust data normalization, sequence sampling, and padding techniques to ensure compatibility with transformer architectures and support stable training.

- Enhanced Simulation Environment: Upgraded the PushTEnv environment with a higher render resolution (320 instead of 96 pixels per side, roughly 11 times as many pixels), making results easier to observe and analyze.
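The normalization and padding steps listed above can be sketched as follows. This is a minimal NumPy sketch, assuming per-dimension min-max scaling to [-1, 1] and edge padding that repeats the first or last frame of an episode when a training window runs past its boundaries. The function names and the window length of 16 are illustrative assumptions, not necessarily this project's exact pipeline.

```python
import numpy as np

def fit_minmax(data):
    """Per-dimension min/max statistics over a (T, D) array."""
    return {"min": data.min(axis=0), "max": data.max(axis=0)}

def normalize(data, stats):
    """Scale each dimension to [-1, 1] using the fitted min/max."""
    span = np.maximum(stats["max"] - stats["min"], 1e-8)  # avoid division by zero
    return (data - stats["min"]) / span * 2.0 - 1.0

def unnormalize(ndata, stats):
    """Invert normalize() to recover the original scale (e.g. for predicted actions)."""
    span = np.maximum(stats["max"] - stats["min"], 1e-8)
    return (ndata + 1.0) / 2.0 * span + stats["min"]

def sample_padded_window(episode, start, length):
    """Return episode[start:start+length], repeating the first/last frame
    wherever the window extends past either end of the episode."""
    idx = np.clip(np.arange(start, start + length), 0, len(episode) - 1)
    return episode[idx]

# Usage on one synthetic 10-step episode of 2-D actions (illustrative data only).
rng = np.random.default_rng(0)
actions = rng.uniform(0, 512, size=(10, 2))
stats = fit_minmax(actions)
nact = normalize(actions, stats)
# Window starting one step before the episode: the first frame is repeated,
# and the tail past step 9 repeats the last frame.
window = sample_padded_window(nact, start=-1, length=16)
```

Padding by index clipping keeps every sampled window the same length, which is what a fixed-size transformer input expects.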

Background

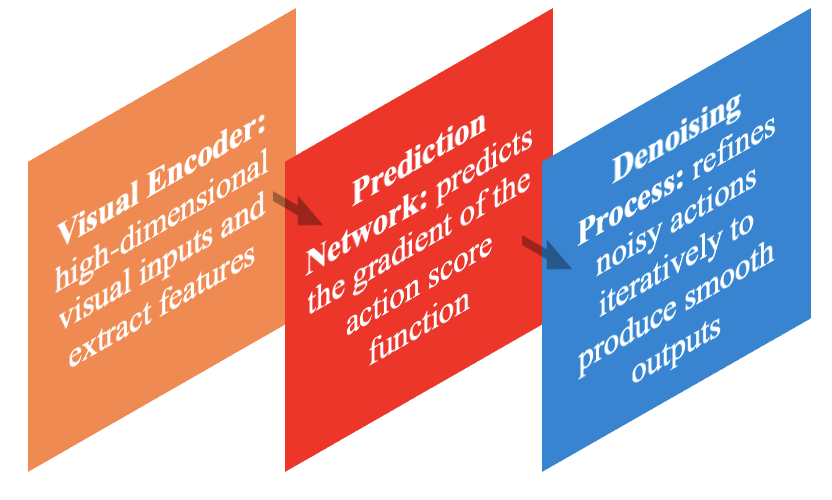

Chi et al. (2023) [1] introduced the Diffusion Policy framework, which extends Denoising Diffusion Probabilistic Models (DDPMs) to visuomotor policy learning. The framework generates action sequences by iteratively refining noisy samples into task-specific actions with a learned noise-prediction network, which plays the role of a score function. While innovative, the original framework has limitations, including difficulty modeling long-term dependencies across sequential observations and actions.
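For reference, the denoising step and training objective can be written roughly as in Chi et al. (2023) [1], as we understand their notation. Here O_t is the observation, A_t^k the action sequence at denoising iteration k, ε_θ the noise-prediction network, ε^k noise sampled for iteration k, and α, γ, σ noise-schedule coefficients that depend on k:

$$
\begin{aligned}
\mathbf{A}_t^{k-1} &= \alpha\left(\mathbf{A}_t^{k} - \gamma\,\varepsilon_\theta(\mathbf{O}_t, \mathbf{A}_t^{k}, k) + \mathcal{N}(0, \sigma^2 I)\right) \\
\mathcal{L} &= \operatorname{MSE}\left(\varepsilon^{k},\ \varepsilon_\theta(\mathbf{O}_t, \mathbf{A}_t^{0} + \varepsilon^{k}, k)\right)
\end{aligned}
$$

Starting from Gaussian noise A_t^K, applying the first update for k = K, ..., 1 yields the denoised action sequence A_t^0.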

Methodology

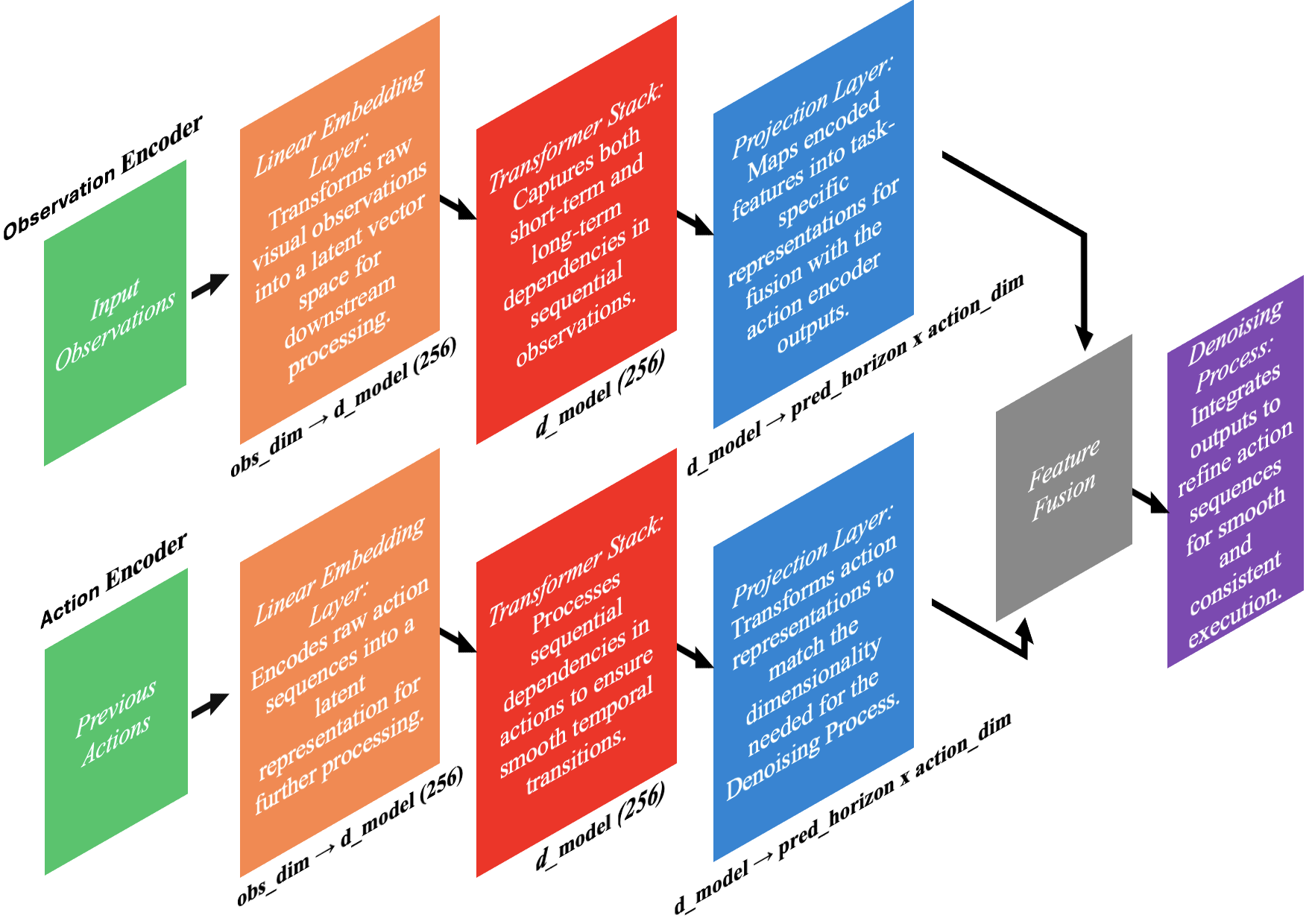

To address the above challenges, this project enhances the Diffusion Policy framework by integrating transformer-based observation and action encoders. The self-attention mechanism of transformers enables the modeling of both short- and long-term temporal dependencies, balancing temporal consistency (coherent actions) and responsiveness (quick adaptation to observations). Additionally, the enhanced architecture includes a custom denoising process that integrates outputs from the encoders for robust and consistent action generation.
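The self-attention mechanism mentioned above can be illustrated with a minimal NumPy sketch of single-head scaled dot-product attention (Vaswani et al., 2017 [2]). Every time step attends to every other step, so one layer can relate the beginning and end of a horizon directly. The random matrices are placeholders for learned weights; the sequence length of 16 is an illustrative assumption, and the head dimension of 32 corresponds to d_model = 256 split across 8 heads.

```python
import numpy as np

def self_attention(x, w_q, w_k, w_v):
    """Single-head scaled dot-product self-attention over a (T, d) sequence."""
    q, k, v = x @ w_q, x @ w_k, x @ w_v
    scores = q @ k.T / np.sqrt(k.shape[-1])        # (T, T) pairwise similarities
    scores -= scores.max(axis=-1, keepdims=True)    # subtract row max for a stable softmax
    weights = np.exp(scores)
    weights /= weights.sum(axis=-1, keepdims=True)  # each row: distribution over all time steps
    return weights @ v, weights

rng = np.random.default_rng(0)
T, d = 16, 32                                       # sequence length and head dimension (illustrative)
x = rng.normal(size=(T, d))
w_q, w_k, w_v = (rng.normal(size=(d, d)) / np.sqrt(d) for _ in range(3))
out, attn = self_attention(x, w_q, w_k, w_v)
```

Because `attn[0, -1]` is nonzero, the first step's output already mixes in information from the last step, which is the property the encoders rely on for long-range dependencies.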

Observation Encoder

Structure:

- A Linear Embedding Layer transforms each input observation (obs_dim = 5) into a feature space of dimension d_model = 256.

- A Transformer Encoder Stack, consisting of 2 layers, captures both short-term and long-term temporal dependencies.

- A Projection Layer maps the encoded observations to the sequence representation used by downstream components.

Dropout Rates: Rates between 0.1 and 0.3 were evaluated, with 0.1 found optimal for balancing regularization and model stability.
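The observation encoder described above can be sketched in PyTorch as follows. This is a minimal sketch, not the project's code: the class name, the batch-first layout, the per-step output projection, and the observation horizon of 2 used in the usage line are assumptions.

```python
import torch
import torch.nn as nn

class ObservationEncoder(nn.Module):
    """Linear embedding -> Transformer encoder stack -> per-step projection (sketch)."""

    def __init__(self, obs_dim=5, d_model=256, nhead=8, num_layers=2,
                 dim_feedforward=2048, dropout=0.1):
        super().__init__()
        self.embed = nn.Linear(obs_dim, d_model)  # lift each observation to d_model features
        layer = nn.TransformerEncoderLayer(
            d_model=d_model, nhead=nhead, dim_feedforward=dim_feedforward,
            dropout=dropout, batch_first=True)
        self.encoder = nn.TransformerEncoder(layer, num_layers=num_layers)
        self.proj = nn.Linear(d_model, d_model)   # assumed per-step output projection

    def forward(self, obs):
        # obs: (batch, obs_horizon, obs_dim) -> (batch, obs_horizon, d_model)
        return self.proj(self.encoder(self.embed(obs)))

encoder = ObservationEncoder()
features = encoder(torch.randn(4, 2, 5))  # batch of 4, assumed obs_horizon of 2
```

Note that `nn.TransformerEncoder` adds no positional information on its own. The description above does not mention a positional encoding, so adding a learned or sinusoidal one would be a further assumption.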

Action Encoder

Structure:

- A Linear Embedding Layer maps actions (action_dim = 2) into the same 256-dimensional feature space used by the transformer.

- A Transformer Encoder Stack, identical in design to that of the observation encoder, processes the action sequence.

- A Projection Layer maps the encoded sequence back to the action space, producing outputs of shape pred_horizon × action_dim.

Dropout Rates: As with the observation encoder, a dropout rate of 0.1 provided the best trade-off between regularization and model accuracy.
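The action encoder can be sketched in PyTorch in the same way. In this minimal sketch the projection flattens the encoded sequence and maps it to pred_horizon × action_dim values. That reading of the projection, the class name, and pred_horizon = 16 are assumptions for illustration.

```python
import torch
import torch.nn as nn

class ActionEncoder(nn.Module):
    """Embed an action sequence, encode it with self-attention, project back to actions (sketch)."""

    def __init__(self, action_dim=2, pred_horizon=16, d_model=256, nhead=8,
                 num_layers=2, dim_feedforward=2048, dropout=0.1):
        super().__init__()
        self.pred_horizon, self.action_dim = pred_horizon, action_dim
        self.embed = nn.Linear(action_dim, d_model)  # 2-D actions -> 256-D features
        layer = nn.TransformerEncoderLayer(
            d_model=d_model, nhead=nhead, dim_feedforward=dim_feedforward,
            dropout=dropout, batch_first=True)
        self.encoder = nn.TransformerEncoder(layer, num_layers=num_layers)
        # Assumed projection: flattened sequence -> pred_horizon * action_dim outputs.
        self.proj = nn.Linear(pred_horizon * d_model, pred_horizon * action_dim)

    def forward(self, actions):
        # actions: (batch, pred_horizon, action_dim)
        h = self.encoder(self.embed(actions))       # (batch, pred_horizon, d_model)
        out = self.proj(h.flatten(start_dim=1))     # (batch, pred_horizon * action_dim)
        return out.reshape(-1, self.pred_horizon, self.action_dim)

model = ActionEncoder()
pred = model(torch.randn(3, 16, 2))  # batch of 3 (noisy) action sequences
```

A per-step `nn.Linear(d_model, action_dim)` would be a lighter alternative; the flattened version lets every output step depend on the whole encoded sequence at the cost of a larger projection matrix.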

Both transformer encoders use nhead = 8 attention heads and feedforward layers with dim_feedforward = 2048, following the base configuration of "Attention Is All You Need" (Vaswani et al., 2017) [2], which uses the same head count and feedforward width with d_model = 512. These settings were validated experimentally to balance performance and efficiency.
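The size of this configuration can be checked by hand. The sketch below counts the trainable parameters of one encoder layer, assuming the layout of PyTorch's `nn.TransformerEncoderLayer`: a fused query/key/value projection, an output projection, two feedforward linears, and two LayerNorms, all with biases. With d_model = 256 and nhead = 8, each head works in 256 / 8 = 32 dimensions.

```python
def encoder_layer_params(d_model, dim_feedforward):
    """Trainable parameters in one Transformer encoder layer (all sublayers with biases)."""
    qkv = 3 * d_model * d_model + 3 * d_model        # fused Q, K, V projections
    out_proj = d_model * d_model + d_model           # attention output projection
    ffn = (d_model * dim_feedforward + dim_feedforward) + (dim_feedforward * d_model + d_model)
    norms = 2 * (2 * d_model)                        # two LayerNorms, weight + bias each
    return qkv + out_proj + ffn + norms

per_layer = encoder_layer_params(d_model=256, dim_feedforward=2048)
stack = 2 * per_layer                                # 2 layers per encoder
head_dim = 256 // 8
print(per_layer, stack, head_dim)  # 1315072 2630144 32
```

The feedforward sublayer accounts for about 80% of each layer's parameters, so dim_feedforward is the main lever for trading model capacity against training cost.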

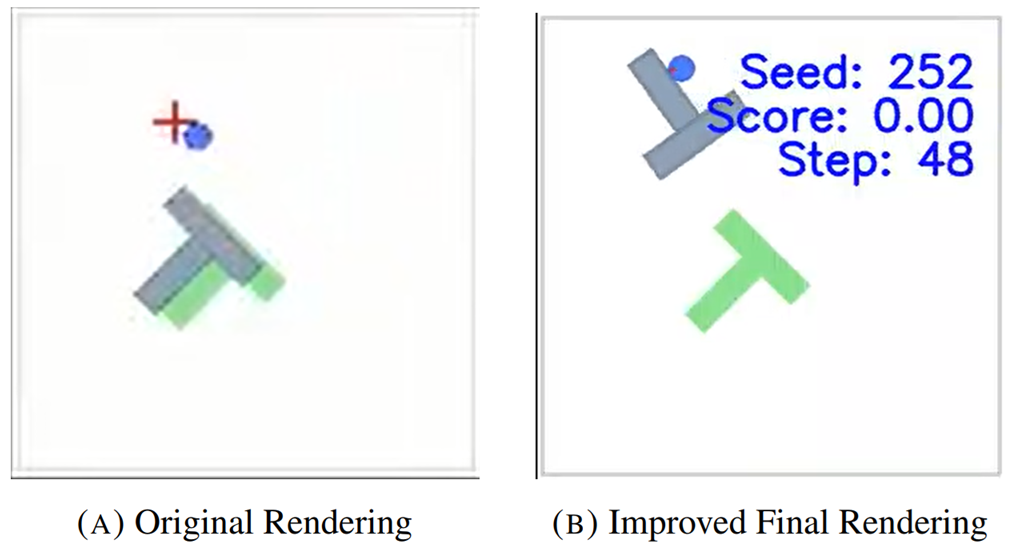

Simulation Environment Improvements

This project substantially improved the rendering quality of the PushT simulation environment. The render resolution was increased from 96 to 320 pixels per side, which yields roughly 11 times as many pixels ((320/96)² ≈ 11.1). The sharper renderings made visual analysis easier, revealed unexpected behaviors, and highlighted how specific initial conditions challenged the agent, giving deeper insight into the model's performance.

Experiments were conducted in the PushT simulation environment, chosen for its lightweight design and challenging dynamics. The evaluation closely followed the original study, using the score and success rate as metrics, along with whether the agent reaches the goal state within a maximum of 200 steps.
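The per-episode score and success criterion can be sketched as follows. This assumes the reward definition of the original Push-T environment as we understand it: the per-step reward is the T-block's coverage of the goal region divided by a success threshold of 0.95 and clipped to [0, 1]; the episode score is the highest reward reached; and the episode succeeds as soon as coverage exceeds the threshold. The `coverages` traces are hypothetical inputs, not simulator output.

```python
def score_episode(coverages, success_threshold=0.95, max_steps=200):
    """Return (score, steps_used, success) for one episode's per-step goal coverage."""
    score, steps_used, success = 0.0, min(len(coverages), max_steps), False
    for step, cov in enumerate(coverages[:max_steps], start=1):
        reward = min(max(cov / success_threshold, 0.0), 1.0)  # clipped, normalized coverage
        score = max(score, reward)                             # score = best reward reached
        if cov > success_threshold:                            # goal reached: episode ends
            steps_used, success = step, True
            break
    return score, steps_used, success

print(score_episode([0.2, 0.6, 0.97]))     # (1.0, 3, True)
print(score_episode([0.1, 0.3] * 150))     # score ≈ 0.316, 200 steps, no success
```

Under this definition, an episode that never reaches the threshold still earns partial credit, which is why score distributions and step counts are reported separately.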

For this imitation learning task, we used the training dataset released by the original Diffusion Policy authors to ensure a fair comparison. The dataset is available at the following link: https://drive.google.com/file/d/1KY1InLurpMvJDRb14L9NlXT_fEsCvVUq/view

Training was performed with three random seeds (1000, 2000, 3000), and each trained model was evaluated on 50 unique initial configurations, for a total of 150 evaluation episodes (3 × 50).
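The evaluation protocol of 3 training seeds × 50 initial configurations can be organized as in the sketch below. `train_policy` and `rollout` are hypothetical placeholders for the project's training and simulation code, and the scores they return are dummy values. Reusing the same 50 environment seeds for every model is an assumption about how the initial configurations are kept identical across comparisons.

```python
import random
import statistics

TRAIN_SEEDS = [1000, 2000, 3000]
NUM_INIT_CONFIGS = 50

def train_policy(seed):
    """Placeholder for training one model; a real version would also seed NumPy and PyTorch."""
    random.seed(seed)
    return {"seed": seed}

def rollout(policy, env_seed):
    """Placeholder rollout returning a dummy score; a real version would reset the env with env_seed."""
    return random.Random(policy["seed"] * 100_000 + env_seed).random()

results = {}
for seed in TRAIN_SEEDS:
    policy = train_policy(seed)
    for env_seed in range(NUM_INIT_CONFIGS):  # same initial states for every model
        results[(seed, env_seed)] = rollout(policy, env_seed)

per_seed_mean = {s: statistics.mean(results[(s, e)] for e in range(NUM_INIT_CONFIGS))
                 for s in TRAIN_SEEDS}
seed_means = list(per_seed_mean.values())
summary = (statistics.mean(seed_means), statistics.stdev(seed_means))  # mean ± std across seeds
print(len(results))  # 150
```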

Results

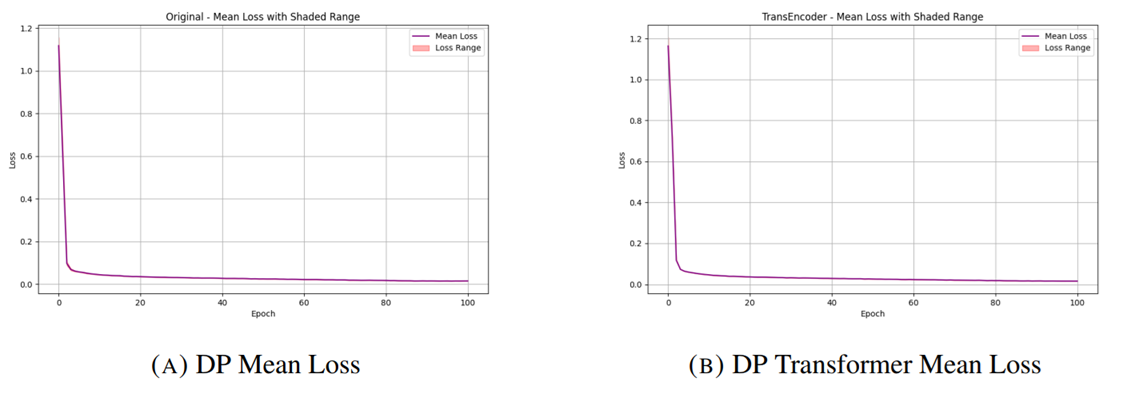

The performance of the enhanced DP Transformer architecture compared with the original Diffusion Policy is demonstrated in the video at the start of the page. Below, we analyze the results in terms of training performance, inference performance, and computational efficiency. In the following plots, DP refers to the original Diffusion Policy, while DP Transformer (or TransEncoder, as labeled in some plot titles) denotes our updated model, which adds Transformer layers for the observation and action spaces. The shaded areas in the plots indicate the corresponding standard deviations.

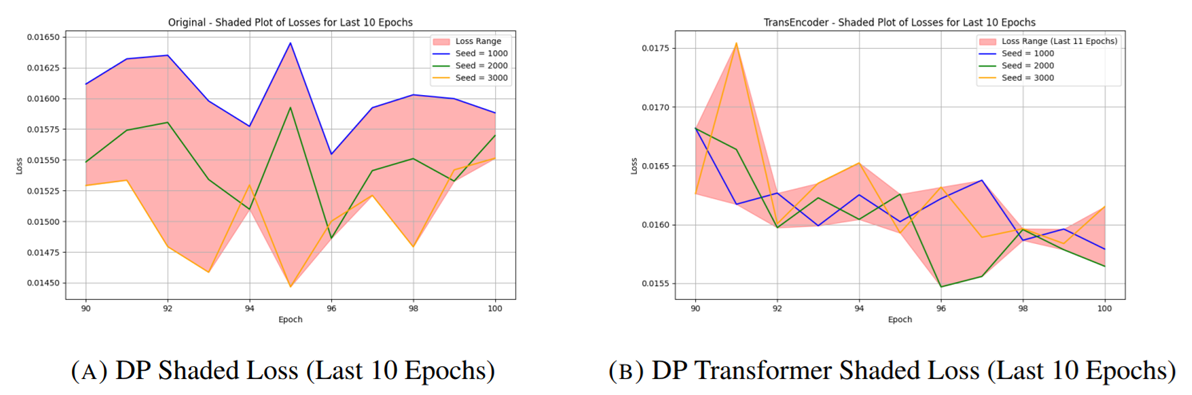

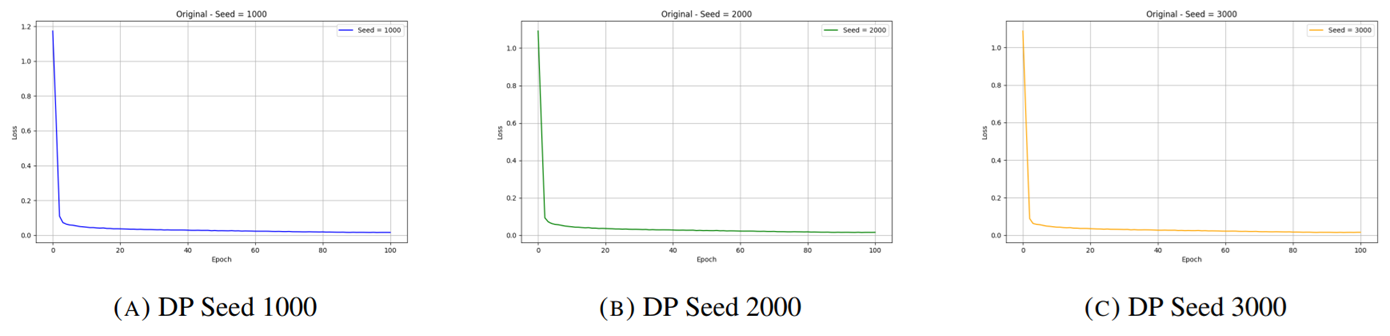

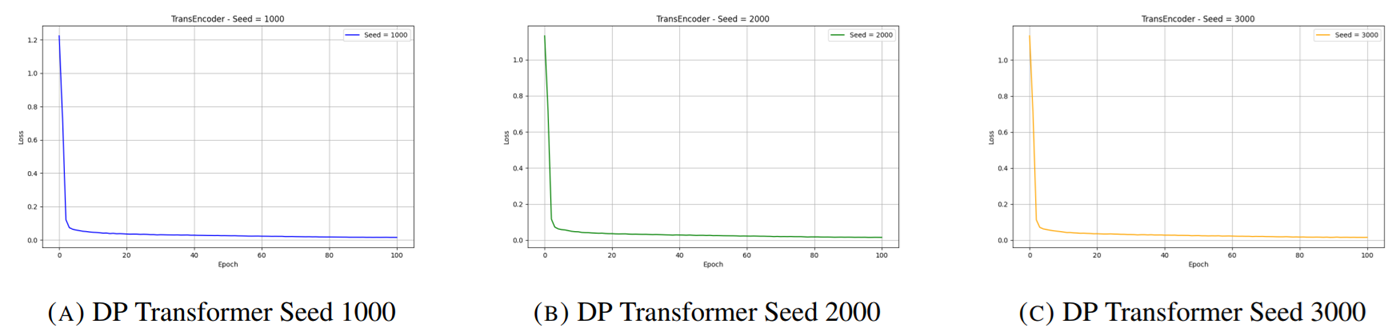

Training Performance: Both the Diffusion Policy (DP) and DP Transformer architectures demonstrated consistent convergence over 100 epochs (Figures 4a and 4b). The DP Transformer exhibited more stable learning curves, with a lower variance between seeds. Key observations include:

- Overall Trends: The final loss values for both models converged to similar ranges (~0.015), showing comparable optimization capabilities. However, the DP Transformer demonstrated reduced variance, indicating more robust training dynamics (Figures 5a and 5b).

- Early Training Phase: During the first 10 epochs, the DP Transformer showed faster convergence compared to the original DP. Its narrower standard deviation band during this phase reflected more consistent behavior across different seeds.

- Late Training Phase: In the final 10 epochs, both models stabilized, but the DP Transformer's loss curves remained more tightly clustered across seeds, further indicating stable and reliable training.
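The mean curves, shaded standard-deviation bands, and early/late-phase comparisons above can be computed from per-seed loss logs as in this NumPy sketch. The `losses` array is a synthetic stand-in for the logged training losses (one row per seed, one column per epoch), and the use of the population standard deviation (`ddof=0`, NumPy's default) is an assumption; the actual plotting code may differ.

```python
import numpy as np

def summarize_runs(losses, window=10):
    """losses: (num_seeds, num_epochs) array of per-epoch training loss."""
    mean = losses.mean(axis=0)          # center line of the plot
    std = losses.std(axis=0)            # half-width of the shaded band (ddof=0)
    early_spread = std[:window].mean()  # average seed-to-seed spread over the first epochs
    late_spread = std[-window:].mean()  # average seed-to-seed spread over the last epochs
    return mean, std, early_spread, late_spread

# Synthetic stand-in: 3 seeds x 100 epochs of decaying loss plus noise (not real results).
rng = np.random.default_rng(0)
epochs = np.arange(100)
losses = 0.015 + 0.5 * np.exp(-epochs / 10) + rng.normal(0, 0.002, size=(3, 100))
mean, std, early, late = summarize_runs(losses)
lower, upper = mean - std, mean + std   # band edges, e.g. for matplotlib's fill_between
```

Comparing `early` and `late` between the two models gives a single number for the "narrower band" observations instead of relying on visual inspection alone.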

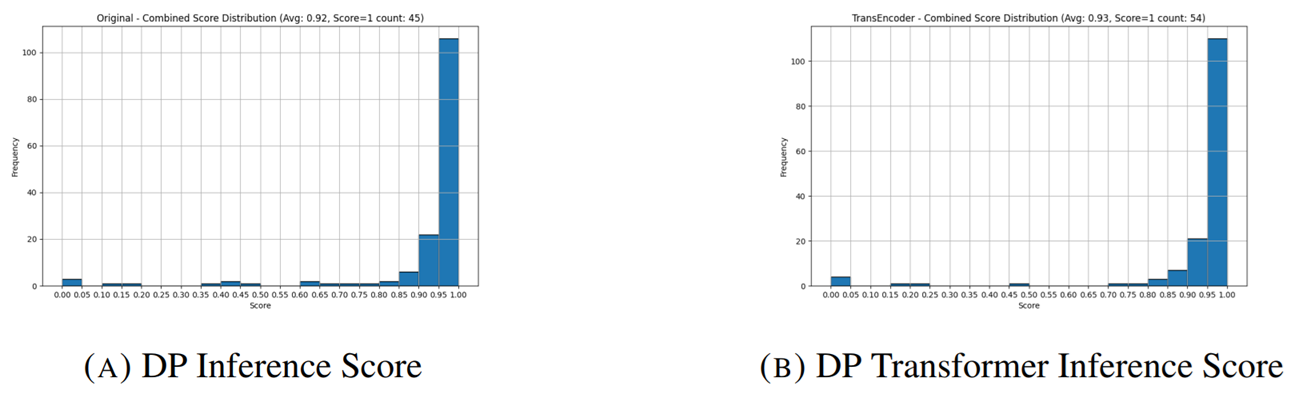

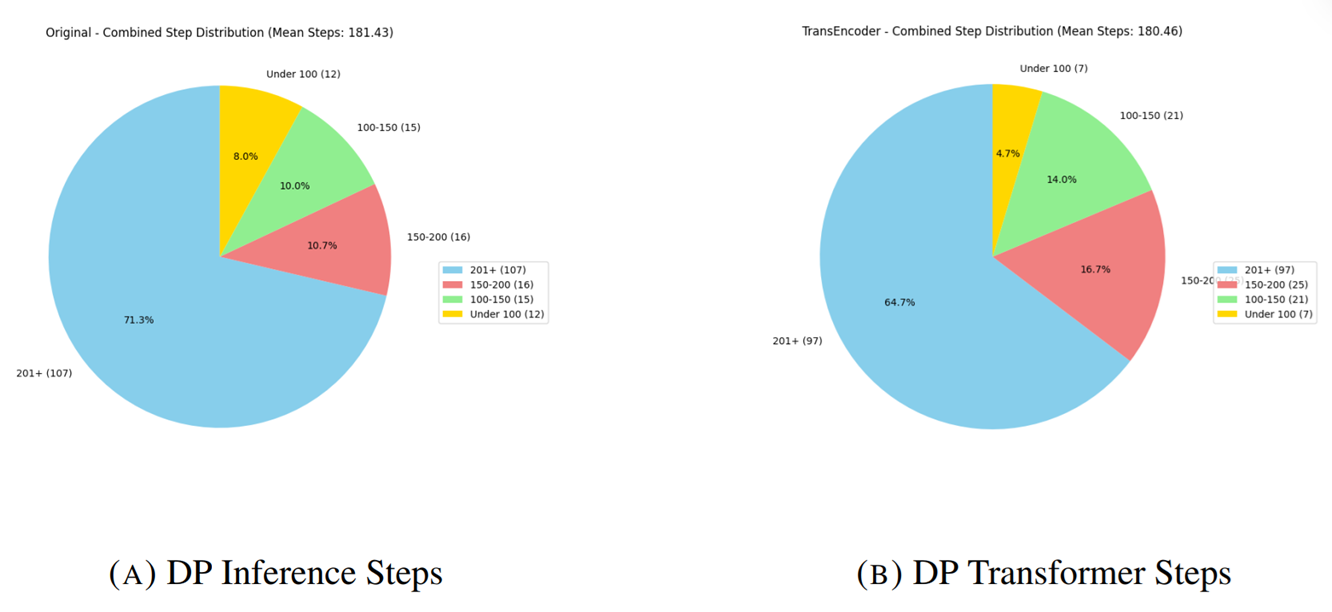

Inference Performance: The DP Transformer outperformed the original DP model during inference across several metrics. Key insights include:

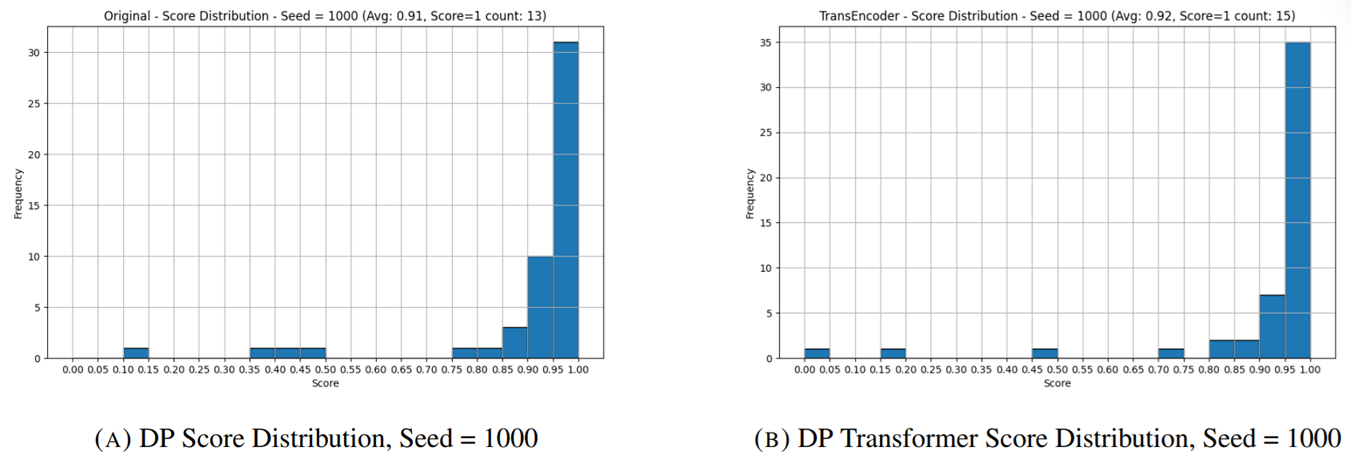

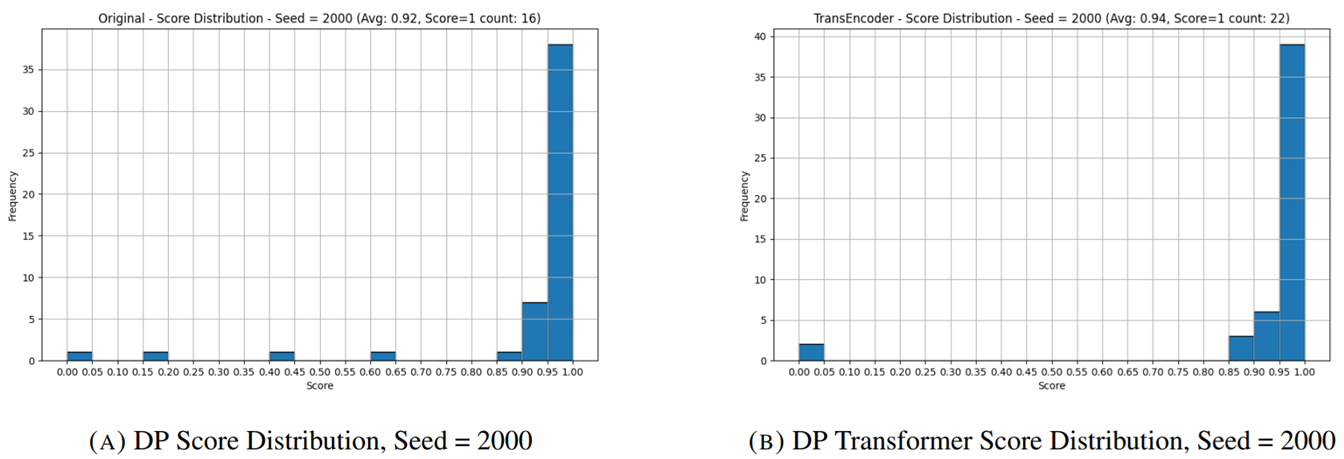

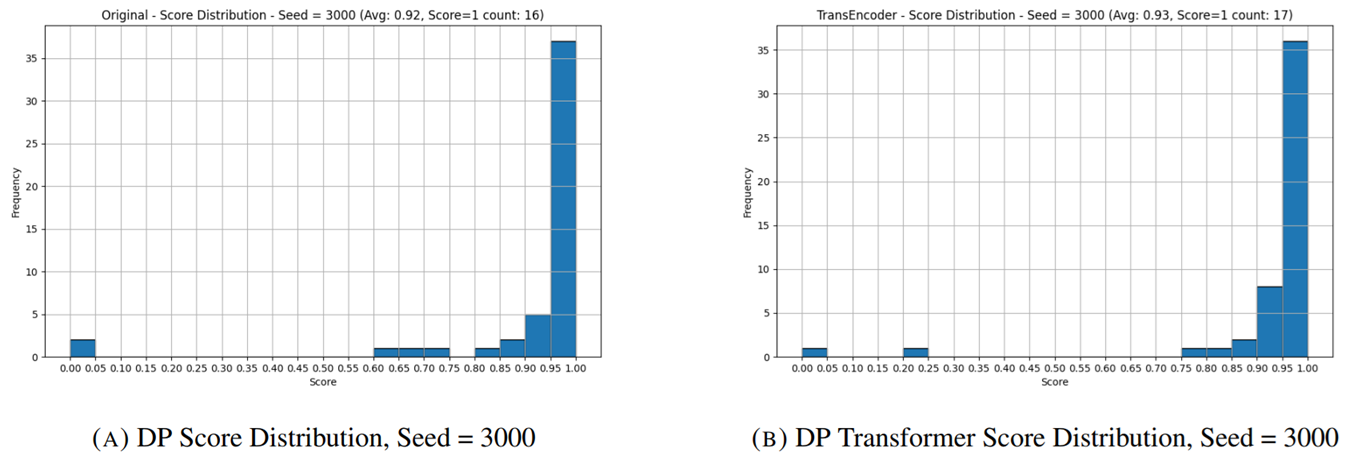

- Score Distribution Analysis: The DP Transformer achieved a higher frequency of perfect scores (1.0) while reducing occurrences of poor performance (scores below 0.5) compared to DP (Figures 6a and 6b). This consistency across seeds demonstrates the reliability of the enhanced architecture.

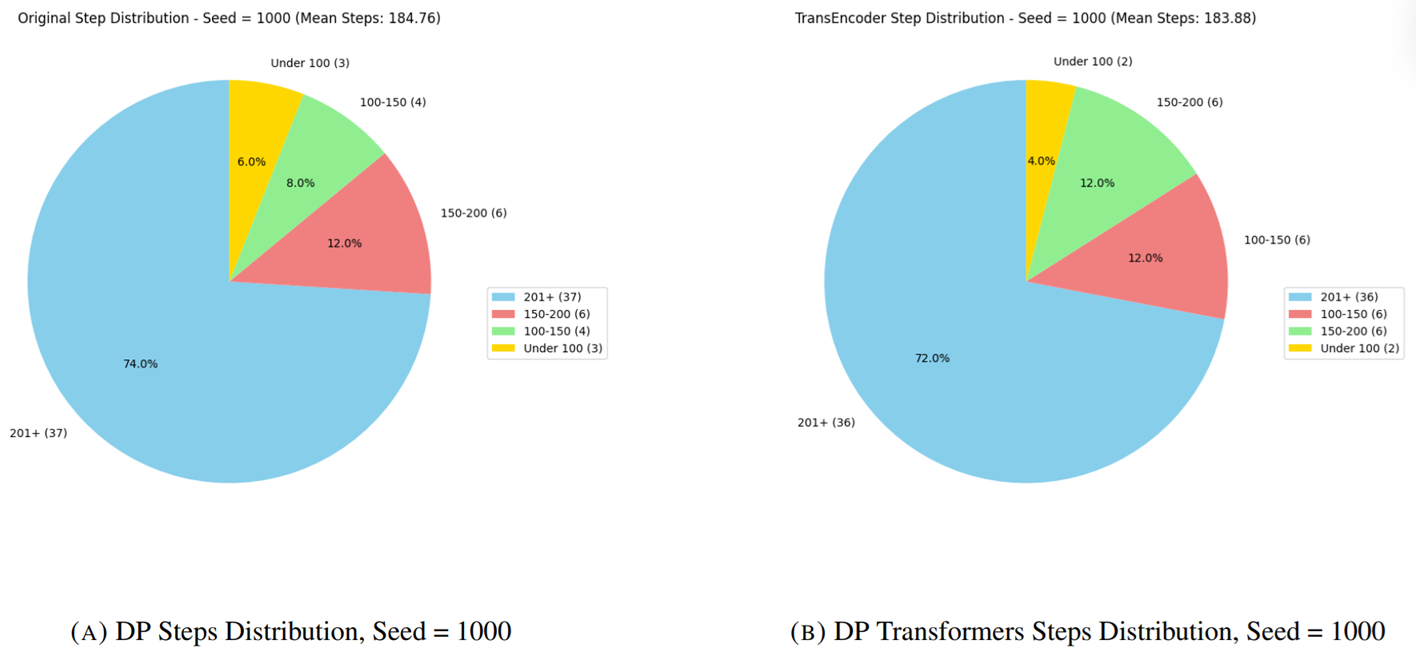

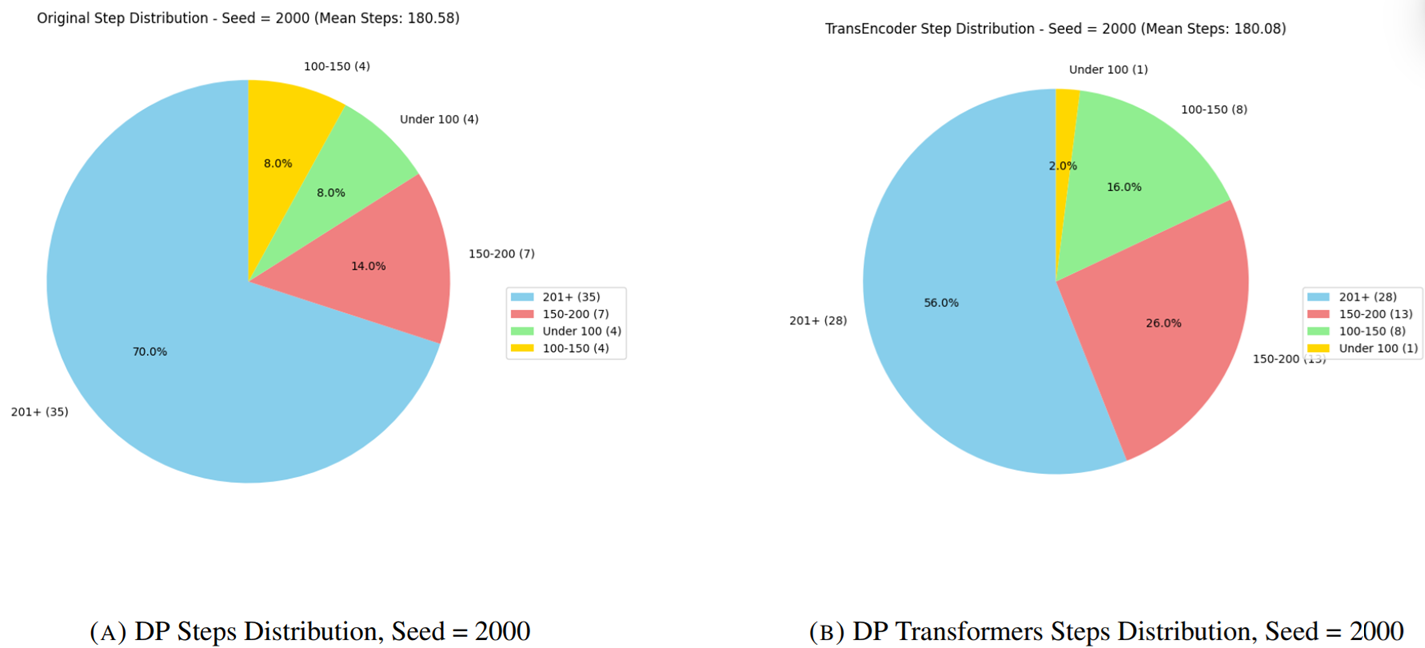

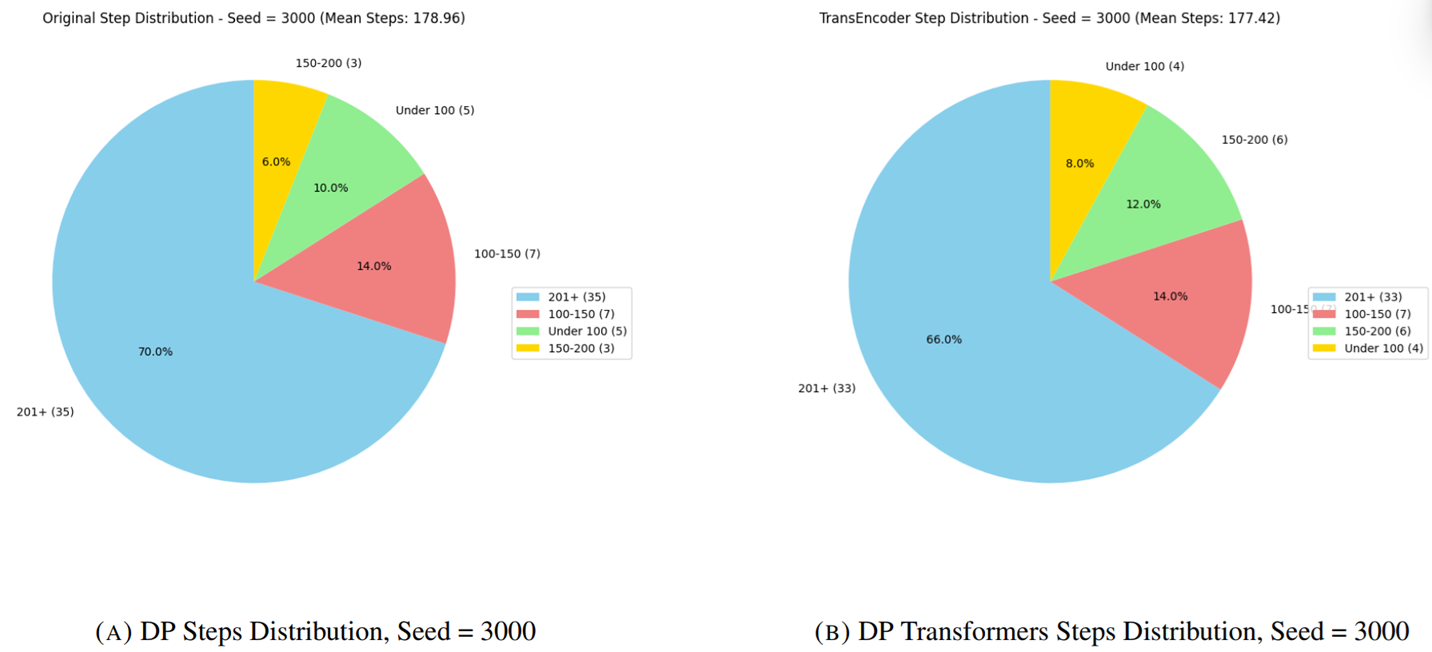

- Step Efficiency: The DP Transformer required slightly fewer steps on average to complete tasks (183.88 vs. 184.76 for the original DP). It also hit the maximum step limit of 200 less often, further indicating improved task efficiency (Figures 7a and 7b). Performance in the 100–150 step range was comparable for both models, whereas the DP Transformer performed better on tasks requiring 150–200 steps.

- Robustness Analysis: Across all evaluated seeds, the DP Transformer exhibited lower variance in both scores and steps, indicating improved consistency across varying initialization conditions.
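The score-distribution and step statistics above can be computed from per-episode results as in the following sketch. The thresholds (perfect score 1.0, poor score below 0.5, step ranges 100–150 and 150–200, and the 200-step limit) follow the text. Counting episodes that hit the limit as 200 steps when averaging is an assumption about how the mean step counts were obtained, and the example episodes are made up.

```python
from collections import Counter
from statistics import mean

MAX_STEPS = 200

def step_bin(n):
    """Assign an episode's step count to the ranges discussed in the text."""
    if n >= MAX_STEPS:
        return "limit"      # reached the 200-step limit
    if n >= 150:
        return "150-200"
    if n >= 100:
        return "100-150"
    return "<100"

def summarize_episodes(episodes):
    """episodes: list of (score, steps) pairs, with steps capped at MAX_STEPS."""
    scores = [s for s, _ in episodes]
    steps = [n for _, n in episodes]
    return {
        "perfect": sum(s == 1.0 for s in scores),  # episodes with score 1.0
        "poor": sum(s < 0.5 for s in scores),      # episodes with score below 0.5
        "mean_steps": mean(steps),                 # capped episodes count as 200
        "step_bins": dict(Counter(step_bin(n) for n in steps)),
    }

example = [(1.0, 120), (0.9, 200), (0.3, 200), (1.0, 170)]  # illustrative values only
summary = summarize_episodes(example)
```

Because failed episodes enter the mean at the cap, small differences in mean steps (such as 183.88 vs. 184.76) mostly reflect how many episodes reached the limit, which is why the per-range counts are also worth reporting.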

Computational Efficiency: Across the three random seeds (1000, 2000, 3000), the DP Transformer required on average about 60 seconds more training time than the original DP. Despite the added complexity of the Transformer encoders, its inference time remained comparable to that of the original DP, as shown in the video. This suggests that the architectural enhancements do not compromise real-time deployment performance.

These results indicate that the Transformer-based observation and action encoders not only maintain the performance of the original Diffusion Policy but also improve training stability, inference consistency, and task efficiency.

Conclusion

The implementation of a structured checkpoint saving and loading system significantly improved the reproducibility of experiments, while comprehensive evaluation metrics across different seeds provided deeper insight into the model's stability. Additionally, the higher rendering resolution (roughly 11 times as many pixels) enabled better visualization of unusual behaviors in challenging scenarios, giving a more detailed and nuanced understanding of the results.
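A checkpoint system of the kind described above might look like the following PyTorch sketch. The function names, the file name, and the stored fields (model and optimizer state, epoch, seed) are assumptions for illustration; the project's actual checkpoint format is not described here.

```python
import os
import tempfile

import torch
import torch.nn as nn

def save_checkpoint(path, model, optimizer, epoch, seed):
    """Store what is needed to resume training or re-run evaluation for one seed."""
    torch.save({"model": model.state_dict(), "optimizer": optimizer.state_dict(),
                "epoch": epoch, "seed": seed}, path)

def load_checkpoint(path, model, optimizer=None):
    """Restore model (and optionally optimizer) state; return the saved epoch and seed."""
    ckpt = torch.load(path, map_location="cpu")
    model.load_state_dict(ckpt["model"])
    if optimizer is not None:
        optimizer.load_state_dict(ckpt["optimizer"])
    return ckpt["epoch"], ckpt["seed"]

model = nn.Linear(5, 2)  # stand-in for the full policy network
optimizer = torch.optim.AdamW(model.parameters(), lr=1e-4)
path = os.path.join(tempfile.mkdtemp(), "seed1000_epoch100.pt")  # hypothetical naming scheme
save_checkpoint(path, model, optimizer, epoch=100, seed=1000)

restored = nn.Linear(5, 2)
epoch, seed = load_checkpoint(path, restored)
```

Storing the seed inside the checkpoint ties each saved model to its training run, so the per-seed evaluations can be reproduced from the files alone.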

Limitations:

- Handling of dynamic environments and unexpected scenarios needs to be improved.

- Integration with real robotic systems would require additional considerations for real-time performance.

- The transformer hyperparameters need to be tuned more carefully.

- A fixed observation horizon may limit the model's ability to capture longer temporal patterns.

Future Work:

- Investigation of more efficient network architectures to reduce training overhead.

- Implementation of data augmentation techniques for better generalization.

- Development of more robust evaluation metrics for real-world scenarios.

References

[1] Chi et al. (2023). Diffusion Policy: Visuomotor Policy Learning via Action Diffusion. In Robotics: Science and Systems (RSS) 2023.

[2] Vaswani et al. (2017). Attention Is All You Need. In Advances in Neural Information Processing Systems (NeurIPS), Vol. 30.

Appendices

Additional Training Comparisons

Additional Inference Comparisons

Supplementary materials, such as the detailed report and extended algorithms, are available upon request.